The Great Deception of “Quality” and the New Algorithmic Religion

We are told, with a paternalistic tone, that to rank in the SERPs (Search Engine Results Pages), we must prove we are certified experts, possess direct and tangible experience, be authorities recognized by the community, and sources of unshakeable truth. But there is a small, negligible detail that often escapes polished conferences and official webinars: how do you prove all this when you are a deserted island in the middle of the digital ocean? How do you maximize E-E-A-T when you don’t have those precious backlinks from the New York Times or the BBC, when you lack academic citations on Google Scholar, or worse, when you are the only original source of information in a world that rewards consensus and repetition?

Google’s official narrative, often conveyed through channels like Search Central, suggests that Artificial Intelligence is not the enemy, provided the content is “helpful” and created to “help people.” But practice, observed through the digital corpses of sites deindexed during the “Spam” and “Core” updates of August and December 2025, tells a story of systemic collateral damage, where legitimate small business sites are wiped out while industrially generated AI spam portals thrive undisturbed for months. It is in this dystopian scenario that the modern SEO consultant must operate. No longer as a gardener cultivating content hoping for sun, but as a defense lawyer in a Kafkaesque court, forced to fabricate evidence of existence and expertise to convince an algorithmic judge who has never possessed a physical body.

This report is not the usual collection of trivial tips suggesting you “add an author bio” or “get natural links.” It is a brutal, exhaustive, and technically detailed analysis of how to hack—in the philosophical and engineering sense of the term—the concept of algorithmic trust. We will explore how to build an impregnable castle of authority using only internal bricks, leveraging obscure patents like Information Gain to turn a lack of external sources from a fatal weakness into a strategic superpower, and how to use semantics and structured data to scream at Google “I am the Entity,” without needing anyone to point a finger at us. Get ready to unlearn much of the “White Hat” banality sold to you for years, because in the AI era, doing exactly the opposite of what Mountain View’s common sense suggests is often the only path for the biological survival of your website.

The Lie of Experience and the Death of the Backlink as the Sole Currency

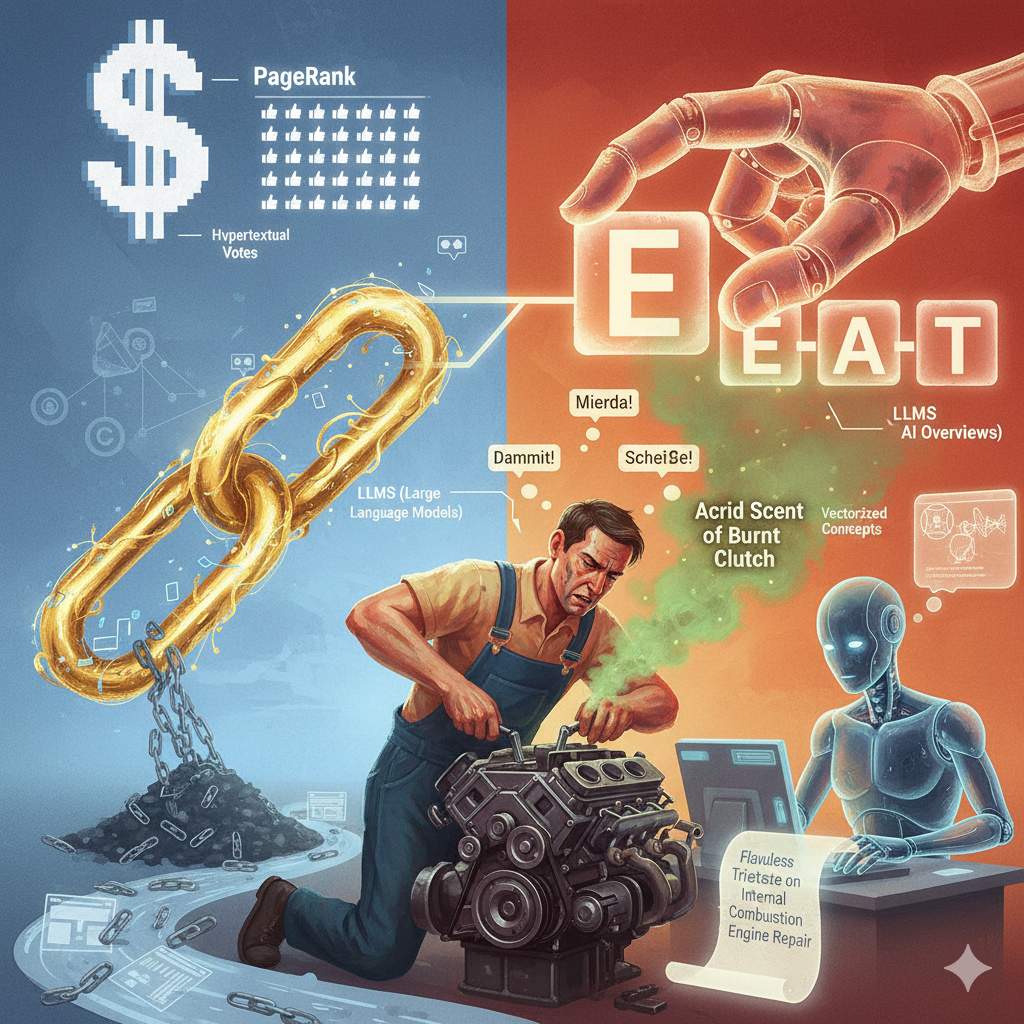

For decades, the reserve currency of the World Wide Web has been the link. A backlink was considered a vote of confidence, a hypertextual “like” that transferred PageRank and authority from one node of the network to another. If you didn’t have links, you didn’t exist; you were digital dark matter. But in 2025 and 2026, with the advent of Large Language Models (LLMs) and the transformation of search into a conversational experience dominated by AI Overviews (the successor to the Search Generative Experience), the intrinsic value of the link is undergoing a grotesque and irreversible mutation. Google knows perfectly well that links can be bought, traded, and manipulated via Private Blog Networks (PBNs) and low-quality guest posts. Artificial Intelligence, on the other hand, does not “click” on links the same way a human user does; AI “reads,” “understands,” and “vectorizes” concepts, looking for semantic connections rather than simple hypertextual votes.

The ultimate irony is that while Google continues to publicly state that links are important, its systems are rapidly evolving to reward something much harder to fake or buy by the pound: Experience. That “E” added almost reluctantly to the E-A-T acronym in 2022 has become the centerpiece of quality evaluation. Experience is the true Achilles’ heel of generative AI. ChatGPT or Gemini can write a flawless treatise on internal combustion engine repair in seconds, but they have never had grease on their hands, never smelled the acrid scent of a burnt clutch, and never cursed in three different languages because a bolt was stripped in an inaccessible position.

The “Source Zero” Paradox and Strategic Self-Citation

Here we reach the beating heart of our problem: how to maximize E-E-A-T when you have no external sources to cite and none that cite you. Conventional SEO wisdom, repeated like a parrot in every basic course, says: “Cite your sources to be credible.” Google itself states in its guidelines that “transparency about sources is a sign of reliability.” But if we follow this advice to the letter, we find ourselves in a deadly logical trap. If we always cite someone else, we implicitly admit that the real authority resides elsewhere. We become aggregators, curators, librarians, but not creators. And in a world where AI is the ultimate aggregator, capable of synthesizing the whole of human knowledge in milliseconds, being a human aggregator is a sentence to oblivion and redundancy.

The advanced strategy, which we will define as “Source Zero,” consists of positioning yourself not as the one who has read the books, but as the one who is writing them in real-time. When you have no external references, you must become the primary reference. This requires a radical and courageous shift in the rhetorical structure of the content. Instead of writing phrases like “According to a Harvard study…”, the narrative must transform into “In our internal analysis conducted on a sample of 500 clients…”. Even if your dataset is tiny, even if it is based only on your personal experience with ten clients, it is your data. It is unique. It is unrepeatable. It is what Google’s patent on “Information Gain” desperately seeks in a sea of duplicate content.

Patent US20200349181A1, widely discussed in technical SEO circles but often ignored by generalists who stop at blog headlines, describes an “Information Gain” score that rewards documents providing additional information compared to what the user has already seen in previous results. It’s not about being longer or more complete, as many mistakenly believe in the “word count” race. It’s about being different. If ten sites on the first page say the sky is blue, and you write a 5,000-word article about the sky being blue citing those ten sites, your Information Gain is zero. AI will ignore you because you add nothing to the knowledge graph. But if you write that the sky appears purple under certain specific atmospheric conditions that only you have observed and documented with a grainy photo from your phone, your Information Gain skyrockets. The algorithm, hungry for novelty to train its models and provide varied answers in AI Overviews, will prioritize your imperfect but original content over the recycled perfection of your competitors.
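To make the “different, not longer” idea concrete, here is a deliberately crude sketch in Python. It is not the scoring method described in the patent, which is not reproduced here; it only assumes that novelty can be approximated as the share of a document’s distinct words that the reader has not already met in earlier results. The function names and sample sentences are hypothetical.

```python
import re

def tokens(text: str) -> set:
    """Lowercased word set; a crude stand-in for real semantic features."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def novelty_score(candidate: str, already_seen: list) -> float:
    """Share of the candidate's distinct words found in none of the
    documents the user has already seen (0.0 = redundant, 1.0 = all new)."""
    cand = tokens(candidate)
    if not cand:
        return 0.0
    seen = set()
    for doc in already_seen:
        seen |= tokens(doc)
    return len(cand - seen) / len(cand)

# Hypothetical "first page" results the user has already read.
seen_results = [
    "The sky is blue.",
    "Why the sky is blue on a clear day.",
]
redundant = "The sky is blue on a clear day."
original = "The sky appeared purple under unusual atmospheric conditions."

print(novelty_score(redundant, seen_results))  # 0.0
print(novelty_score(original, seen_results))   # 0.75
```

A real system would compare meaning rather than vocabulary, but the toy version already shows why a 5,000-word restatement of the consensus scores zero while one odd, documented observation does not.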

The Irony of Google “Quality” and the Massacre of the Innocents

It is macabrely amusing to note how Google preaches the importance of “people-first” content, yet its algorithmic updates often decimate sites run by true enthusiasts in favor of publishing giants full of invasive ads and mediocre content that is “authoritative” only by seniority. The August 2025 Spam update, for example, hit small legitimate businesses hard—like local plumbers or artisans—just because they had old blog comments dating back five years or anchor text that was slightly too optimized, while sites entirely generated by AI continue to rank and steal traffic.

This phenomenon teaches us a fundamental lesson: E-E-A-T is not a measure of objective truth, morality, or the goodness of the author’s soul. It is a series of technical, stylistic, and structural signals that we must simulate perfectly. If Google is a blind bureaucrat robot that can only “feel” digital documents, we must fill out the forms exactly as it wants, presenting the form of truth, even if the content of the form is, in essence, a self-declaration saying “trust me, I’m a doctor.” The lack of external references is not a problem of substance, but a signal problem that can be compensated for by amplifying the noise of internal signals until they become deafening.

Internal Authority Architecture and the Power of the Self-Referential “Cluster”

When you cannot import authority from the outside in the form of backlinks, you must build and cultivate it from within, creating a closed ecosystem that feeds itself. Imagine your website not as a scattered collection of pages, but as a closed crystalline structure, a hall of mirrors where the light of PageRank (or “link juice”) is reflected, refracted, and amplified infinitely until it appears much more powerful than its original source. This is the theory behind Topic Clusters taken to their extreme consequences, a strategy we might call “Entity Stacking On-Page.”

The Topic Cluster as a Relevance Nuclear Reactor

Most SEOs create “Topic Clusters” in a lazy and academic way: they write a generic pillar page and five or six short articles linked to it. To maximize E-E-A-T without external help, we must be much more aggressive and structured. Each cluster must be conceived as a complete doctoral thesis. The Pillar Page is not a simple summary or an index; it is the manifesto, the statement of intent, the unified theory of your subject. And the satellite pages (Cluster Content) are not just deep dives; they are the forensic evidence, the case studies, the granular data supporting the claims made in the pillar.

The dark secret Google will never explicitly tell you lies in the anchor text of internal links. Guidelines suggest using “natural” and varied anchor text. Irony of ironies: empirical studies and field tests suggest that descriptive anchor texts, rich in keywords and “exact,” continue to work better for conveying semantic relevance, especially when the link is internal and not subject to the same anti-spam filters as external links. If you have no one out there linking to you with the keyword “best SEO consultant for SaaS,” you must link to yourself, hundreds of times, from every corner of your site, with variations of that exact phrase. Is it narcissistic? Absolutely. Does it work? Incredibly well. It creates such semantic density that it convinces the algorithm that the target page is the de facto authority on that topic, simply because every other page on the site states it with unshakeable conviction.

In the absence of external votes, internal consistency becomes the only vote that matters. If your site is a vertical encyclopedia on a specific topic, and every page links to others with surgical precision and explanatory anchor text, you create a private and self-sufficient “Knowledge Graph.” Google, in its insatiable hunger to structure the world’s information, will ingest this graph. If it is internally consistent, free of logical contradictions, and dense with connections, the algorithm will tend to accept it as true for lack of better alternatives. It is the principle of “truth by repetition” applied to the science of the semantic web: if you say it enough times and with enough consistency, it becomes a fact for the machine.
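Before amplifying internal anchors, it helps to see what the site currently says about itself. The sketch below, using only the Python standard library, collects every internal link from a set of pages and counts the anchor texts pointing at each target. The URLs, page contents, and function names are hypothetical, and a real audit would run on crawled HTML rather than inline strings.

```python
from collections import Counter, defaultdict
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collects (href, anchor text) pairs from one HTML document."""
    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            anchor = " ".join("".join(self._text).split())
            self.links.append((self._href, anchor))
            self._href = None

def internal_anchor_profile(pages: dict, site: str) -> dict:
    """Map each internal target URL to a Counter of anchor texts."""
    host = urlparse(site).netloc
    profile = defaultdict(Counter)
    for page_url, html in pages.items():
        parser = LinkCollector()
        parser.feed(html)
        for href, anchor in parser.links:
            target = urljoin(page_url, href)  # resolve relative links
            if urlparse(target).netloc == host:  # keep internal links only
                profile[target][anchor.lower()] += 1
    return dict(profile)

pages = {
    "https://example.com/blog/a":
        '<p><a href="/saas-seo">SaaS SEO consultant</a> and '
        '<a href="https://other.example.org/">elsewhere</a></p>',
    "https://example.com/blog/b":
        '<p>See <a href="/saas-seo">click here</a> or '
        '<a href="../saas-seo">SaaS SEO consultant</a></p>',
}
profile = internal_anchor_profile(pages, "https://example.com/")
for target, anchors in profile.items():
    print(target, anchors.most_common())
```

Targets whose most common anchor is “click here” or “read more” are the first candidates for the descriptive rewrite argued for above.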

The Author Bio: Writing “Expert” Isn’t Enough, You Must Create a Myth

Another point where Google’s irony is palpable is author reputation management. They tell us ad nauseam that author authority is a critical factor, but then we see generic sites ranking at the top with authors named “Admin” or “Editorial Team.” However, to maximize E-E-A-T in 2025/2026 without external sources, we must play their game better than they do, taking it to levels of bureaucratic paroxysm. A simple two-line bio at the bottom of the page is useless; it’s an empty gesture. You need a complete “Entity Home” for the author.

Every author on your site must have a dedicated page structured not as a discursive biography, but as a machine-verifiable Curriculum Vitae. This is where schema markup comes in, which we will cover later, but at the visible content level, this page must scream “Experience” in every pixel. Don’t just list degrees, which AI can invent. List failures. List specific projects with precise numbers. Write sentences like: “I personally managed the SEO migration for a site with 1 million monthly visits and lost 20% of traffic for three months before recovering it thanks to this specific technical fix.” This is a sign of real experience much stronger and more credible than “SEO Expert with 10 years of experience.” Generative AI, programmed to be helpful and positive, rarely admits specific failures and does not invent “war” details so vivid and imperfect unless explicitly asked (and even then, they sound fake and soulless).

The author page must be linked from every single article, and every article must be linked from the author page as part of a dynamic “Portfolio.” We create a closed loop of reputation. If the author has no external presence (no LinkedIn, no Twitter, no conferences), we create the presence on the site itself. We publish “interviews” with the author conducted by the author themselves (perhaps in the form of detailed FAQs on their methodology). It seems absurd and solipsistic, but for a crawler, a well-structured interview page with questions and answers is a valid source of information about the “Person” entity, regardless of who published it. You are providing the raw data Google seeks, packaged exactly how it likes.

Information Gain and the Science of “Different” Content

We return forcefully to the concept of Information Gain, because it is the true cornerstone for those with no external sources to lean on. Google’s patent clearly suggests the algorithm tries to predict what the user will want to know after reading the standard results. If your content is just a “complete guide” repeating exactly what the other ten “complete guides” on the first page say, you are useless to the search engine. You are background noise. You are redundancy that costs computing resources to index.

To maximize this factor without citing Harvard studies or McKinsey reports, you must introduce data, perspectives, or angles that do not exist elsewhere in the indexed corpus. How do you generate this novelty without a research lab and a million-dollar budget?

The first technique is Creative and Improbable Synthesis. Take two known but apparently unrelated concepts and join them into a new theory. Example: “SEO and Jungian Psychology: The Archetypes of Keywords.” No one has statistical data on this; no one has written academic papers on it. Therefore, the moment you publish, you are the absolute and worldwide authority on the subject. You have created a semantic “blue ocean” where you have no competitors. You have generated a new concept that Google must catalog and attribute to you as source zero.

The second technique concerns Proprietary Data (Even if Microscopic). You don’t need a survey of a thousand people commissioned from YouGov. Just analyze your own server logs, your customer support emails, or even conduct an experiment on yourself. Write an article titled: “I tried not using meta descriptions for a month on this site: here is the raw data of what happened.” This is primary data. Google loves primary data more than anything else because it is rare. Even if the statistical sample is irrelevant to science, it is relevant to content uniqueness.

The third technique is the Argued Contrary Opinion. If the SERP is dominated by articles saying “X is good,” write a piece titled “Why X is the Silent Ruin of Your Business.” The algorithm, in its increasingly sophisticated attempt to provide a balanced view or cover the “dialogue turn” in AI interactions, might insert your content precisely because it offers that negative or alternative information gain totally lacking in other results.

The irony here is powerful: Google says it wants “facts” and “scientific consensus” (especially in YMYL topics), but its ranking systems are hungry for “novelty” and “diversity.” Often, a wacky but original theory (as long as it’s not blatantly dangerous or illegal) ranks better than a stale truth repeated a thousand times, precisely thanks to the technical mechanism of Information Gain. Obviously, I am not suggesting that you lie blatantly, but that you theorize boldly. A theory is, by definition, original content that requires no external citations because it stems from your own head and logical processing.

The “Seasonal Take” Case Study: Beating Consensus with Specificity

A clear and documented example of how Information Gain can beat mass consensus is the case of a site that created a “new seasonal approach” to an otherwise hackneyed topic. While giant competitors published the usual generic “evergreen” guides, this site intercepted a specific, temporal, and overlooked “pain point” that no one was treating with proper attention. They created content that answered a question users had only at a precise time of year, providing a different solution than the usual “advice for all seasons.”

The result was a featured snippet won in six months and exponential traffic growth, maintained even during subsequent core updates that penalized more generic sites. This proves you don’t need to be the New York Times or have their domain authority; you just need to say something the New York Times didn’t think to say, or say it at a time when they are distracted covering generalist news. Temporal and contextual specificity is a form of E-E-A-T that requires no backlinks, only timing and user understanding.

Hacking the Knowledge Graph with Schema Markup and Semantics

If visible content is the meat of your site, structured data (Schema Markup) is the skeleton that holds it up. And when you have no one vouching for you from the outside, you must be the one to tell robots exactly who you are, what you do, and why you are important, with obsessive taxonomic precision.

Google often says structured data is not a direct ranking factor. This is one of their most elegant and technically correct lies, but misleading in practice. It’s not a ranking factor in the sense that “more schema = more points,” but it is the fundamental language used to explain to the algorithm what it is looking at. Without schema, Google must guess the context by analyzing unstructured text, an error-prone process. With schema, Google knows. And uncertainty is the sworn enemy of ranking and conversion into Rich Snippets.

The “Person” and “Organization” Entity: The Digital Passport

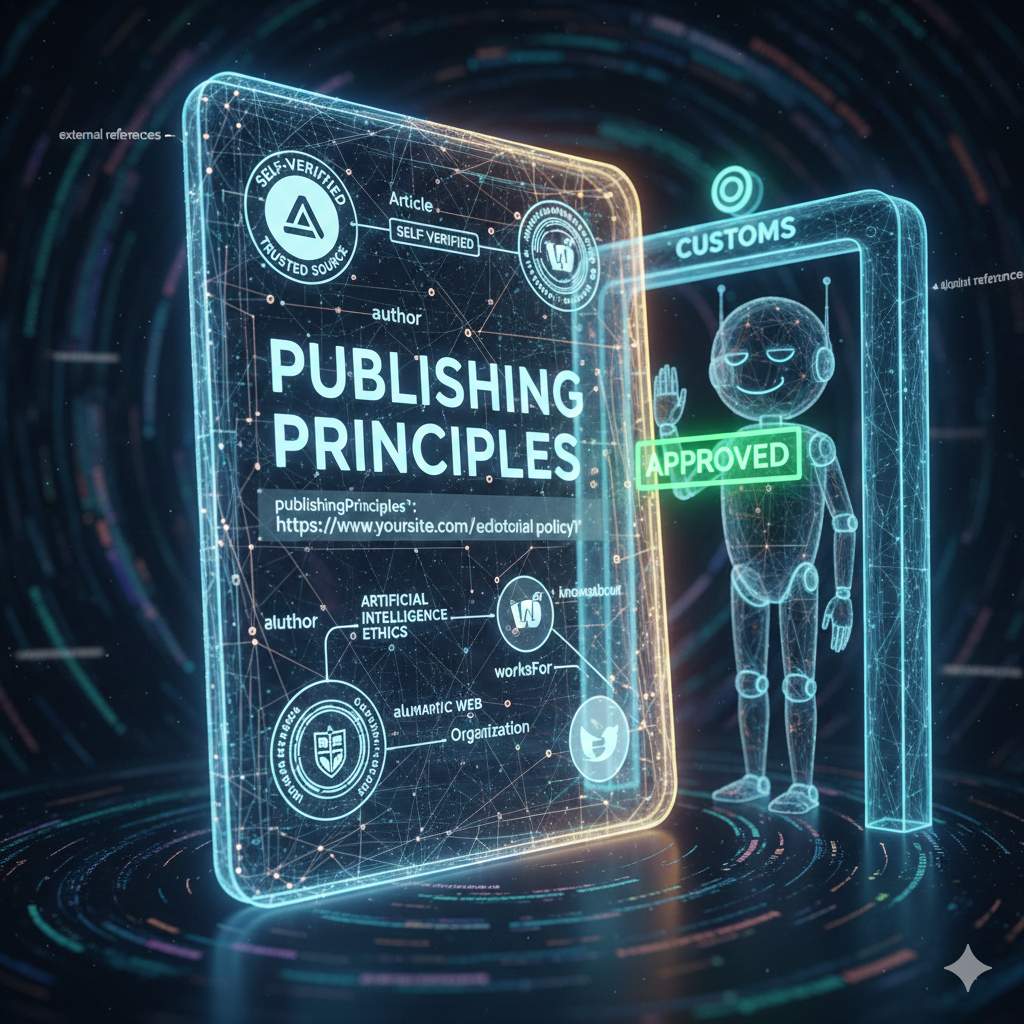

For a site without external references, implementing Person and Organization markup is vital, not optional. Don’t limit yourself to standard fields like name and URL. Use advanced properties such as knowsAbout, alumniOf, and sameAs, and include them even if they point only to your empty social profiles or to pages on your own site.

Here is the advanced trick: use the mentions or citation property within the Article markup to cite yourself or other pages of your site as authoritative sources. Create a loop of structured citations at the code level. Furthermore, use the knowsAbout property to link the author to concepts present in Wikidata or Wikipedia. Warning: you are not saying Wikipedia talks about you (which would be false and ignored), but that you know those specific concepts. It is a one-way semantic association. You are telling the Knowledge Graph: “This node (Author) has a declared expertise relationship with this node (Authoritative Topic).” If you do this consistently across hundreds of pages, the algorithm starts to statistically associate the two entities.
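As an illustration of that one-way association, here is a minimal Python sketch that builds Person markup whose knowsAbout entries point at Wikipedia concept pages. The author name, the example.com URLs, and the helper name are hypothetical; knowsAbout, sameAs, and Thing are real schema.org terms, but whether a search engine acts on them is the assumption the whole strategy rests on.

```python
import json

def person_jsonld(name, url, same_as, topics):
    """Person entity declaring topics it knows about.

    topics: list of (label, concept_url) pairs; the concept URL identifies
    the topic and does not claim that the page mentions the author.
    """
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "@id": url + "#person",
        "name": name,
        "url": url,
        "sameAs": list(same_as),
        "knowsAbout": [
            {"@type": "Thing", "name": label, "sameAs": concept_url}
            for label, concept_url in topics
        ],
    }

# Hypothetical author; the sameAs list may point at pages on your own site.
person = person_jsonld(
    name="Jane Doe",
    url="https://example.com/about/jane-doe",
    same_as=["https://example.com/interviews/jane-doe-methodology"],
    topics=[
        ("Search engine optimization",
         "https://en.wikipedia.org/wiki/Search_engine_optimization"),
    ],
)

# Wrap the JSON in the script tag that carries JSON-LD in a page.
snippet = ('<script type="application/ld+json">\n'
           + json.dumps(person, indent=2)
           + "\n</script>")
print(snippet)
```

Keeping a stable @id for the author lets every article on the site refer to the same node instead of redefining the person each time.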

The JSON-LD code must not be a generic copy-paste from a WordPress plugin. It must be nested and complex. The Article entity must have an author which is a Person, who worksFor an Organization, which in turn has publishingPrinciples defined on a specific page of the site. This density of structured information compensates for the lack of external signals. You are providing Google with a “digital passport” so detailed, rich with visas and stamps (even if self-applied), that the algorithmic customs officer, out of laziness, overload, or simple respect for formal compliance, will let you pass.
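The nesting described above can be sketched as follows. This is one possible shape, not an official template: the headline, names, and example.com URLs are hypothetical, while author, worksFor, publishingPrinciples, publisher, and citation are existing schema.org properties.

```python
import json

def article_jsonld(headline, article_url, author_name, author_url,
                   org_name, org_url, principles_url, cited_urls):
    """Build Article -> author (Person) -> worksFor (Organization)."""
    organization = {
        "@type": "Organization",
        "@id": org_url + "#organization",
        "name": org_name,
        "url": org_url,
        # Page where the editorial policy is spelled out.
        "publishingPrinciples": principles_url,
    }
    person = {
        "@type": "Person",
        "@id": author_url + "#person",
        "name": author_name,
        "url": author_url,
        "worksFor": organization,
    }
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": article_url,
        "author": person,
        # Refer back to the organization node by @id instead of repeating it.
        "publisher": {"@id": organization["@id"]},
        # Structured self-citations to other pages of the same site.
        "citation": [{"@type": "CreativeWork", "url": u} for u in cited_urls],
    }

doc = article_jsonld(
    headline="A month without meta descriptions: the raw data",
    article_url="https://example.com/blog/no-meta-descriptions",
    author_name="Jane Doe",
    author_url="https://example.com/about/jane-doe",
    org_name="Example Consulting",
    org_url="https://example.com/",
    principles_url="https://example.com/editorial-policy",
    cited_urls=["https://example.com/blog/server-log-study"],
)
print(json.dumps(doc, indent=2))
```

Generating the markup from one function, rather than pasting it by hand, keeps the author and organization nodes identical across hundreds of pages, which is the consistency the argument depends on.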

Particular attention should be given to the publishingPrinciples property. Many SEOs ignore it completely. Creating a page called “Editorial Policy” or “Fact-Checking Standards” where you explain in detail how you verify your information (even if the “facts” are your professional opinions) and linking it in the schema is a massive “Trustworthiness” signal. Google has explicitly said it looks for transparency signals. Formally declaring “I check my sources this way” is a positive signal, even if no one actually checks that you are checking. It is the bureaucracy of trust.

The AI Era and Survival from Spam through Imperfection

2024 and 2025 saw “Spam” updates that looked like indiscriminate carpet bombings. Legitimate sites were deindexed, traffic zeroed, total panic among webmasters. The funny and, at the same time, tragic thing? Many pure spam sites generated with AI survived and are still thriving. Why? Because AI often respects Google’s formal rules better than humans do. AI structures paragraphs well, uses the right transition words, makes no typos, and maintains a neutral, informative tone. AI is the “perfect” content creator according to the old guidelines.

Doing the Opposite: Imperfection as a Human Signal

If you want to prove you are not an AI and maximize your human E-E-A-T, you must be deliberately imperfect. The ultimate irony is that in 2026, an occasional grammatical error, a convoluted sentence, a strong opinion, or a useless digression could become the most powerful “Humanity” signals at your disposal. AI writes in a flat, aseptic, and perfect “corporate English.” The human voice has peaks, valleys, interruptions, emotions, and, yes, mistakes.

To maximize E-E-A-T, insert “non-scannable” or “difficult” elements for an LLM into your content. The first element is Messy Anecdotes. Tell personal stories that don’t have a clear moral, that end abruptly, or that are only tangentially relevant. AI tends to close everything with a moral summary like “In conclusion, it is important to consider…”. You close with “Anyway, in the end, it didn’t work, too bad.”

The second element is Idiosyncratic Language. Use strange metaphors, colloquialisms, or neologisms that only you use. Create a brand vocabulary. If AI tries to predict the next word (token) based on statistical probability, your goal is to surprise it. Increase the “perplexity” of the text. A text with low perplexity tends to read as AI. A text with high perplexity and “burstiness” (sudden variations in sentence rhythm and length) is statistically more likely to be human.
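Perplexity needs a language model to compute, but burstiness can be approximated with a few lines of Python. The sketch below assumes one simple definition, the standard deviation of sentence length divided by the mean; the sentence splitter is naive and the sample texts are invented. Treat it as a way to look at your own drafts, not as an AI detector.

```python
import re
from statistics import mean, pstdev

def sentence_lengths(text: str) -> list:
    """Word counts per sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std / mean).

    0.0 means every sentence has the same length; higher values mean
    the rhythm swings between short and long sentences.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)

flat = "The cat sat down. The dog ran off. The bird flew away."
bursty = ("No. It failed. We spent three whole weeks rebuilding the "
          "redirect map by hand and nothing moved.")

print(round(burstiness(flat), 2))
print(round(burstiness(bursty), 2))
```

The flat sample scores zero because all three sentences have four words, while the second sample, with its one-word opening and long closing sentence, scores far higher.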

The third element is Low-Quality Original Media. Paradoxically, a grainy, poorly lit photo taken with a cell phone of a hand-scribbled notebook page is a sign of E-E-A-T a thousand times superior to a perfect 4K stock image or an image generated by Midjourney. The “ugly” image shouts “I was there.” It is the forensic proof of physical experience in the real world, something AI cannot easily fake without looking artificial.

Surviving Deindexing: Add, Don’t Remove

If you are hit by an update (which is likely if you are small and without strong links), don’t make the fatal mistake of “pruning” all content as some old-school gurus suggest. Often, deindexing is a “Quality Classifier” problem: the classifier has decided the entire site is “unhelpful” or lacks added value. The solution is not to remove content, but to add density and trust signals. Add those detailed “Author” pages, those “Methodology” pages, those descriptive and redundant internal links. And then, force re-indexing by submitting updated sitemaps and, where your content type is eligible, using Google’s Indexing API (officially limited to job postings and livestream video pages), or simply wait for the next “refresh” of the Quality Score, which can take months of purgatory.
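For the sitemap part of that recovery plan, a minimal Python sketch is shown below. It writes a sitemap in the sitemaps.org 0.9 format with a lastmod date for each newly added trust page; the URLs and dates are hypothetical, and submitting the file (for example through Search Console) remains a separate manual step.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries) -> str:
    """entries: iterable of (url, last_modified) with last_modified a date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # lastmod signals which pages changed since the last crawl.
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages added after the update hit.
sitemap_xml = build_sitemap([
    ("https://example.com/about/jane-doe", date(2026, 1, 15)),
    ("https://example.com/methodology", date(2026, 1, 16)),
])
print(sitemap_xml)
```

Only set lastmod to dates on which the page really changed; a sitemap that claims every page was modified today carries no information.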

There is an interesting case study of a site hit by the spam update that recovered its positions not by deleting pages, but by rewriting the AI-generated parts with a more human, polemical tone rich in original data. They transformed “correct but useless” content into content “with personality and flaws.” And Google forgave them, returning their traffic.

Navigating the Waters of “Navigational Intent” and Brand Building

An often overlooked aspect of advanced SEO is navigational intent. If people search for your name or your brand name on Google, this is the definitive and unassailable E-E-A-T signal. It means you are a real entity in the minds of users, not just a random page answering a generic query. But how do you get brand searches without spending millions on TV advertising or external campaigns?

The answer lies in creating “Linkable Assets” that are not necessarily linked, but searched for. You must give a proprietary name to your method, theory, or process. Don’t call it “SEO Strategy without links.” Call it “The Ghost Protocol of” or “The Semantic Resonance Matrix.” Write it everywhere on your site. Use it in your video titles (even if hosted only on the site). Use it in newsletters.

People, intrigued by this unique term they don’t find elsewhere, will start searching Google for “What is the Ghost Protocol?”. Google will see the query associated with your brand and your site. Boom. Authority generated from nothing. You have created a concept, a lexical entity. You have become the authority on that concept because you invented it. It is a perfect tautology, but algorithms like tautologies: they are easy to verify and confirm. If you are the only source for term X, then for the algorithm, you are the authority on X.

Advanced Technicalities for 2026 and Beyond

We cannot ignore the purely technical aspect, even if it is often overrated by speed fetishists. E-E-A-T is also communicated through code structure, visual stability, and accessibility. But here too, there is irony. Google says Core Web Vitals are a ranking factor. Yet, we constantly see very slow, heavy sites full of scripts ranking perfectly well if the content is strong and the authority is high. Don’t obsess over a 100/100 score on PageSpeed Insights if it forces you to remove elements that give personality and proof of experience to the site, like a heavy but authentic video or high-resolution images of your products.

Semantic HTML, however, is crucial. Use HTML5 tags properly and with intention. The <article>, <section>, <aside>, <figure>, and <figcaption> tags are not just decoration for purist developers. They help crawlers surgically distinguish “Main Content” from “Supplementary Content” and advertising. Rater guidelines insist heavily on the specific evaluation of Main Content. If your layout is confusing and based only on generic <div> tags, the algorithm might not understand where the article ends and the footer or sidebar begins, diluting the relevance of the text. Using the <address> tag for author contact details is another microscopic but useful signal for anchoring the entity to physical reality.
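To see why the tags matter, consider how a simple parser could separate main from supplementary content when the markup is semantic. The Python sketch below is an illustration under stated assumptions, not a description of how any crawler works: it treats text inside an article element as main content unless it sits inside aside, nav, or footer, and the sample page is invented.

```python
from html.parser import HTMLParser

class MainContentSplitter(HTMLParser):
    """Sorts visible text into main (inside <article>) and supplementary."""
    SUPPLEMENTARY_TAGS = {"aside", "nav", "footer"}

    def __init__(self):
        super().__init__()
        self.article_depth = 0
        self.supplementary_depth = 0
        self.main = []
        self.supplementary = []

    def handle_starttag(self, tag, attrs):
        if tag == "article":
            self.article_depth += 1
        elif tag in self.SUPPLEMENTARY_TAGS:
            self.supplementary_depth += 1

    def handle_endtag(self, tag):
        if tag == "article" and self.article_depth > 0:
            self.article_depth -= 1
        elif tag in self.SUPPLEMENTARY_TAGS and self.supplementary_depth > 0:
            self.supplementary_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self.article_depth > 0 and self.supplementary_depth == 0:
            self.main.append(text)
        else:
            self.supplementary.append(text)

page = """
<body>
  <nav>Home</nav>
  <article>
    <h1>Title</h1>
    <p>Body text.</p>
    <aside>Related links</aside>
    <figure><figcaption>Photo of my notebook</figcaption></figure>
  </article>
  <footer>Copyright</footer>
</body>
"""

splitter = MainContentSplitter()
splitter.feed(page)
print(splitter.main)
print(splitter.supplementary)
```

Replace every tag in the sample with a generic div and the same parser has nothing to go on, which is the dilution risk described above.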

Finally, to end up in AI answers (the new AI Overviews), you must structure answers so they are easy for an LLM to “ingest” and regurgitate. Use direct questions as headings (H2, H3) and answer immediately in the next paragraph with a simple, declarative sentence (Subject, Verb, Object), followed by a list of points or logical steps. If you cannot use visual bullet points because your style doesn’t allow for them, use short, punchy sentences separated by periods. This “Q&A” format is the favorite food of LLMs, which look for high-confidence text fragments to extract and present as truth.
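The Q&A pattern can be checked mechanically before publishing. The following Python sketch tests two things only: that the heading is a direct question and that the paragraph under it opens with one short declarative sentence. The 30-word limit and the function name are assumptions chosen for illustration, not thresholds published by any search engine.

```python
import re

def is_extractable_answer(heading: str, first_paragraph: str,
                          max_words: int = 30) -> bool:
    """True if the heading is a question and the answer opens with a
    short sentence ending in a period."""
    if not heading.strip().endswith("?"):
        return False
    # Take everything up to the first sentence-ending punctuation mark.
    first_sentence = re.split(r"(?<=[.!?])\s+", first_paragraph.strip(),
                              maxsplit=1)[0]
    if not first_sentence.endswith("."):
        return False
    return len(first_sentence.split()) <= max_words

good = is_extractable_answer(
    "What is Information Gain?",
    "Information Gain is a score that rewards documents adding something "
    "new. It is not about length.",
)
no_question = is_extractable_answer(
    "Information Gain explained",
    "Information Gain is a score that rewards new information.",
)
teaser = is_extractable_answer(
    "What is Information Gain?",
    "Have you ever wondered what it is? Read on to find out.",
)
print(good, no_question, teaser)  # True False False
```

Run it over every H2 and H3 on a page and rewrite the sections that fail; a teaser opening is exactly the kind of fragment an LLM cannot quote as an answer.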

Concluding?

In conclusion, maximizing E-E-A-T in the AI era without external sources is an act of intellectual and technical rebellion. It means rejecting the idea that truth must be validated by third parties or majority consensus. It means asserting your digital existence through density, internal consistency, and the radical originality of your content.

Don’t wait for Google to give you permission to be authoritative or for another site to grant you the grace of a link. Take the authority. Build a network of content so interconnected, dense, and logically unassailable that the algorithm has no other statistical choice but to classify you as a node of truth in its graph. Use schema markup to declare your identity with the precision of a notary. Use Information Gain to say new things that no one else has the courage to say. And above all, stop trying to look like a neutral, soulless encyclopedia. Be biased, be specific, be embarrassingly and loudly human.

In a sea of synthetic, gray, perfect, and hallucinated content, the colorful imperfection, inconsistency, and lived experience of real human life are the only things AI cannot replicate convincingly. And ironically, they are exactly what Google is desperately trying to find, even if its own algorithms, trained on mediocrity, sometimes struggle to recognize them at first glance. Do the opposite of what “content farms” do. Don’t scale to infinity. Go deep into the abyss. Don’t seek easy consensus. Seek argued dissent and the impossible niche.

And if all else fails, remember: even Google is just complex code written by imperfect humans (and increasingly by AI imitating those humans) desperately trying to understand and order the world. Sometimes, it’s enough to speak to it in its native language of structured data, semantic vectors, and logical tautologies to convince it that you are the king of your little digital kingdom, even if no one outside your walls knows it yet. Your authority begins where you decide it begins, not where a backlink ends.